Developer's Guide to Creating Talking Menus for Set-top Boxes and DVDs

Best Practices for Talking Menus

System Operation

The first set of questions in the previous section mainly addresses operational concerns that directly affect the user's ability to easily control the audio navigation system. The second set of questions deals with the user's ability to understand the content of the menus.

It may be helpful to think of the two groups in another way. Inasmuch as the first list determines the mechanical aspects of the audio-navigation feature, its questions can also be thought of as the kind that could be used to shape the behavior of an entire product line or library. It would make sense, for example, if all DVDs released by a particular distributor shared common features regardless of the content or the style of a given title. Likewise, as set-top-box technology evolves and one generation of audio-navigation interface gives way to the next, users would benefit if the founding principles of interface behavior were passed continuously from one software iteration to the next. Answering these questions with a global approach in mind will also help establish a universal grammar for the design of audio interfaces.

On or Off

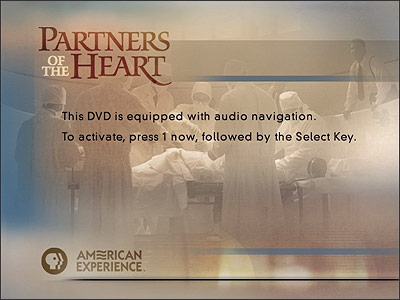

There is perhaps no more important design decision than the one that determines how audio navigation will be enabled or disabled. Obviously, if a user who is blind cannot enable the system, it is useless. But by the same token, if a sighted user cannot disable the system, it might be seen as a liability, despite the fact that many sighted users can derive benefit from an audio-navigation feature. The real issue here is choice: can the system be switched on and off, and how easily? Our solution is to have the insertion of the DVD trigger an audio prompt that instructs the user how to enable the audio-navigation feature, as illustrated in the first screen of the Partners of the Heart DVD.

This ensures that even a first-time user can easily access the audio-navigation feature without help from a sighted friend. Also, the user always has the option of either enabling or disabling audio navigation at any time during operation of the DVD or STB.

Developers should follow these recommendations when designing the enable/disable feature:

- Provide an audio prompt at startup that instructs the user how to enable the audio-navigation feature.

- Include instructions for enabling or disabling the system after the startup sequence has completed (if this is different from the method at startup).

- Do not require the user to choose between turning audio navigation on or off before continuing the startup sequence. Provide a timed pause during which the user may act before defaulting to the audio-disabled setting.

- Assign a single button on the remote control at startup to toggle the enabled / disabled setting, if possible.

- Assign a remote-control sequence for toggling the audio-navigation feature even after startup. (A user who is blind should be able to turn on the audio-navigation system even when a program is in progress. Do not require the user to return to the startup sequence or expect that the user will be able to locate the accessibility settings menu within the DVD or STB menu tree.)
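
The startup behavior recommended above can be sketched as a small state machine. This is only an illustration under stated assumptions: the key name `AUDIO`, the 10-second window, and the function names are hypothetical and do not come from any real player or STB API.

```python
from dataclasses import dataclass

TOGGLE_KEY = "AUDIO"  # hypothetical name for the single toggle button


@dataclass
class AudioNavState:
    enabled: bool = False  # startup defaults to the audio-disabled setting


def run_startup(key_presses, timeout_s=10.0):
    """Resolve the timed pause that follows the startup audio prompt.

    key_presses: iterable of (seconds_since_prompt, key) tuples in time order.
    A TOGGLE_KEY press inside the window enables audio navigation; otherwise
    startup simply continues with the audio-disabled default.
    """
    state = AudioNavState()
    for elapsed, key in key_presses:
        if elapsed > timeout_s:
            break  # window has closed; keep the default
        if key == TOGGLE_KEY:
            state.enabled = True
            break
    return state


def toggle(state):
    """Flip the setting at any time after startup, even mid-program."""
    state.enabled = not state.enabled
    return state.enabled
```

Because the user is never forced to answer, a startup with no key presses falls through to the disabled default, while `toggle` remains available afterward without a return to the startup sequence.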

Voice Choice

There are two ways to give voice to an audio-navigation system: a recorded human voice, or a computer-synthesized voice. Each has its distinct strengths and weaknesses.

Recorded human voices are in common use today. Many menu-navigation systems use human voices to provide prompts, most notably in telephone-voice-navigation systems. To build a voice-navigation system using a human voice, a person must be cast in the role of the speaker and that person must be recorded pronouncing all of the words or phrases contained in the menu tree. Those recordings are then played in response to user choices.

The advantages of using human voices include:

- They are extremely clear and easy to understand.

- They are compatible with existing technology. Voice samples can be recorded on a DVD or stored in the chip memory of a set-top box without significant modification to hardware.

- The full range of human races, ages, genders and native language speakers is available.

The disadvantages of using human voices include:

- They are inflexible. To change a menu item, the original speaker must be re-recorded.

- Human voices are more costly. Besides the salary of the speaker and recording costs, issues of rights and residuals may pertain.

- Once the voice has been recorded, it is not easy to change characteristics such as speed and verbosity without recording parallel sets of menus.

The advantages of using synthesized voices include:

- They are very flexible. To change a spoken item, simply change the text provided to the synthesizer.

- The cost of creating a menu is low. There are no talent fees.

- Characteristics such as speed and verbosity can be altered.

The disadvantages of using synthesized voices include:

- The technology to create very convincing voices remains costly and has not been embedded in DVD players or STBs.

- The technology to create very convincing voices has not yet been embedded in the central servers that feed digital content to the STBs located in subscriber homes.

- Particular languages or voice types might not be available.

Today, digitized human voices are the norm. However, in an ideal world, playback devices would contain the hardware and software required to turn text into convincing human speech. That is not yet the case. However, the technology to create synthesized voices outside of a given DVD player or STB does exist, offering the possibility of a hybrid approach.

For example, DVD designers seeking to reduce costs could create synthesized voices at their own workstations, and then embed them, as they would a human voice, in the audio-navigation feature included on the disc. This would eliminate the cost of the speaker and of the recording studio. It would also allow the developer to change the content of the audio menus as easily as he or she could alter visual menus and other graphical content.

Because most audio-navigation systems will continue to rely on recorded human voices for the foreseeable future, the following recommendations can help smooth the eventual transition to the exclusive use of synthesized voices.

Developers should follow these recommendations when creating audio-navigation prompts and responses:

- Ensure that recorded human voices are clear and easy to understand.

- Consider using a professional narrator.

- Become acquainted with rights and residuals issues affecting talent costs before casting a speaker.

- Try to cast a speaker who is likely to be available in the future should further recording be necessary.

- Avoid design or coding choices that might impede the eventual change to synthesized voices.
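
One way to keep the door open for synthesized voices is to hide the voice source behind a common interface, so that menu code never depends on how the audio was produced. The sketch below is an assumption-laden illustration, not a prescribed design: the class names, the `audio_for` method, and the idea of keying clips by menu text are all hypothetical.

```python
from typing import Callable, Dict, Protocol


class VoiceSource(Protocol):
    """Anything that can turn menu text into playable audio bytes."""

    def audio_for(self, text: str) -> bytes: ...


class RecordedVoice:
    """Looks up pre-recorded clips keyed by the exact menu text."""

    def __init__(self, clips: Dict[str, bytes]):
        self._clips = clips

    def audio_for(self, text: str) -> bytes:
        # A KeyError here means the phrase was never recorded.
        return self._clips[text]


class SynthesizedVoice:
    """Wraps any text-to-speech function supplied by the caller."""

    def __init__(self, synthesize: Callable[[str], bytes]):
        self._synthesize = synthesize

    def audio_for(self, text: str) -> bytes:
        return self._synthesize(text)


def speak_menu(items, voice: VoiceSource):
    """Return audio for each menu item, regardless of the voice type."""
    return [voice.audio_for(item) for item in items]
```

With this separation, moving from recorded to synthesized speech means passing a different `VoiceSource` to `speak_menu`; the menu logic itself does not change.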

Prompts and Feedback

Interactive quality is the heart of the audio-navigation system. Aside from the basic ability to turn speech on and off, it is the consistency, predictability and ease of use that will make or break a talking menu. Simply put, the better the talking menu can anticipate the user's needs without interfering with the user's ability to choose, the more successful it will be. To that end, the talking menu should behave as follows:

- Always announce the screen title when switching to a new screen.

- Always respond to a user choice. When moving from menu item to menu item, the interface should announce each item. When selecting an item from a menu, the interface should acknowledge that choice before taking action.

- Prompt or announce once per user action. Do not endlessly repeat the previous message while waiting for a user to take action.

- Provide an easy-to-use repeat function on the remote control. The user should be able to ask the system to repeat the previous message. Repeat the name of the current screen when repeating the previous message, as a means of preventing the user from becoming lost in the menu tree.

- Make key sequences on the remote control as simple as possible. Try to achieve all necessary functionality using only the arrow keys and the Select key.

- Consider wrapping to the top selection when the user is at the bottom of the menu and presses the down key. When the user is at the top and presses the up key, consider wrapping to the bottom. If wrapping is not possible, give some audio feedback, such as repeating the last menu item.

- Repeat the navigation instructions as often as seems appropriate for the expected use pattern. For example, a DVD that someone might pick up only occasionally should frequently remind the user of what he or she needs to do next. An STB that will be used more often might have both a wordy option, with frequent reminders of how to use it, and a more terse option for those who are familiar with it.
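
Several of the behaviors listed above (announcing the screen title, responding to every move and selection, wrapping at the ends of the list, and repeating on request) can be sketched in a few lines. This is a minimal illustration that returns text instead of playing audio; the class and method names are hypothetical, and announcing the first item along with the title is an assumption of the sketch.

```python
class TalkingMenu:
    def __init__(self, title, items):
        self.title = title
        self.items = list(items)
        self.index = 0
        self.last_message = ""

    def _say(self, message):
        # A real player would start audio playback here; this sketch
        # just remembers the message and returns it as text.
        self.last_message = message
        return message

    def enter(self):
        """Announce the screen title (and first item) on a new screen."""
        self.index = 0
        self.last_message = self.items[0]
        return f"{self.title}. {self.items[0]}"

    def move(self, step):
        """step is +1 for the down key, -1 for the up key; wraps at both ends."""
        self.index = (self.index + step) % len(self.items)
        return self._say(self.items[self.index])

    def select(self):
        """Acknowledge the choice before the caller takes action on it."""
        return self._say(f"{self.items[self.index]} selected")

    def repeat(self):
        """Repeat the previous message, prefixed with the current screen name."""
        return f"{self.title}. {self.last_message}"
```

Each method speaks exactly once per user action, and only the arrow keys, the Select key, and a repeat key are needed to drive it.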

Timing and Global Variables

Some choices faced by developers seem insignificant, but a mistake can easily confuse users. Attention to detail in interface design always pays off. That's why it's important to enumerate several low-level aspects of the audio interface that are easy to overlook but have a real impact on the user's experience.

As the user interacts with the navigation function, he or she expects the system to respond to input from the remote control in very specific ways. If these expectations are not met, the user can become anxious and begin to question whether the system is set up properly, whether the remote is working, and so on.

To avoid this distress, developers should take care to make their audio-navigation systems as responsive as possible through the following behaviors:

- Always allow user input from the remote to interrupt spoken messages. Do not force the user to wait for a response until playback of a message has concluded.

- Do not loop messages. Provide a means for the user to request the system to repeat the current location and/or selection.

- Provide a menu choice, on every screen, that allows the user to enter an accessibility preference and settings area. In this area, provide an enable/disable toggle and, if possible, speaking rate and verbosity settings. Also if possible, allow users to save multiple settings, catering to the needs of multiple members of the same household.

- Include short, silent buffer handles at the beginning and end of individual speech files, if necessary. These handles ensure that the spoken item will not be truncated when played for the user. It is important, however, to fine-tune these handles to keep them as brief as possible to make the system as responsive as possible.
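
The silent buffer handles mentioned in the last item can be added when speech files are prepared. The following is a rough sketch that assumes raw signed PCM audio held as bytes, where silence is a run of zero bytes; the function name and the 50-millisecond defaults are hypothetical starting points to be tuned by ear, not recommended values.

```python
def add_silent_handles(pcm, sample_rate_hz, lead_ms=50, tail_ms=50,
                       sample_width_bytes=2, channels=1):
    """Return pcm with short runs of silence before and after it.

    Assumes signed PCM, where silence is all zero bytes. Unsigned 8-bit
    audio would need a different silence value.
    """
    frame_bytes = sample_width_bytes * channels

    def silence(ms):
        # Integer math keeps the padding aligned to whole audio frames.
        frames = sample_rate_hz * ms // 1000
        return bytes(frames * frame_bytes)

    return silence(lead_ms) + pcm + silence(tail_ms)
```

Keeping the handle lengths as parameters makes it easy to shorten them until truncation just stops occurring, which preserves responsiveness.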

Translating the Visual to the Verbal

When the operational foundation of the audio interface (the behaviors outlined above) is well established and predictable for the user, developers will find that much of the sting of translating visual information to spoken text has been removed from the process. As long as the user knows that certain controls will always elicit predictable responses, and thus enjoys a high degree of confidence that he or she will not become lost within a menu structure, the developer is free to imagine creative ways to organize information in order to capture the intent and feel of a graphic menu system, without feeling confined by its look.

As already discussed, people process audio information very differently than they process visual information. The graphic field is two-dimensional: imagine a plane with aspects of height and width. The eye naturally skims freely across the entire visual field, processing both dimensions simultaneously and looking instinctively for intersections between those two dimensions. This is why the eye can take in a grid, such as a program guide, and quickly find the intersection between the time of day and a given channel.

A simple visual list can contain rich contextual information about the relative importance or relationship between items. Text items can be colored, indented or enlarged to suggest those relationships, and all of that information is available to the eye at a glance.

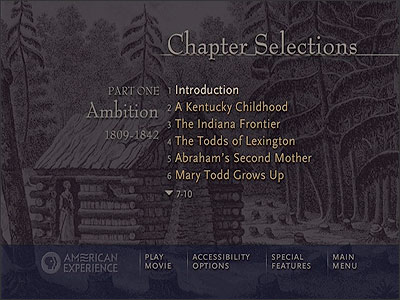

For example, the first chapter-selection screen on the Abraham and Mary Lincoln — A House Divided DVD is a list of eleven items. To the eye, they readily group into two categories of action: the first, seven selections to explore various chapters; the second, four selections at the bottom of the screen that allow the user to switch to different menus, including a return to the main menu. This contextual information is readily available to a sighted user at a glance and it adds, in effect, a second dimension to the list of eleven items.

Herein lies a crucial difference between the visual and auditory fields. Specifically, the auditory field is nearly one-dimensional. Merely reading this list aloud will not help the listener to appreciate the transition from one category to the next. To build a conceptual understanding of the difference between the first seven items and the last four items, the user who is blind will have to pay very careful attention. And it may be unreasonable for a developer to assume that the user will do so.

The problem is easy to identify. Listening is a time-based activity, in which one piece of information follows another at a fixed speed. It is difficult to skim that information or to process more than one auditory stream at a time. There is no auditory equivalent of a list where the items are grouped, either in clumps or arranged in a two-dimensional grid.

In order to mimic visually embedded contextual information in an auditory list, aspects of speech would have to change from one item to the next — timing, volume, emphasis, speed, language and so on. The listener would have to concentrate with great determination in order to connect items based on their spoken attributes, and if the list was long and complex, the listener could easily be overwhelmed.

It is for these reasons that developers seeking to translate graphics to spoken text must learn to separate the information they seek to present in words from the information they seek to communicate through context or other visual cues. Once developers achieve that separation, they can better choose which information must be communicated and which information may well be discarded. Beyond speaking the name of a menu item, developers may choose to add other spoken information to communicate the graphical content that the blind user cannot perceive. But beware: adding too much audio information may only confuse the user. For the user who is blind, the primary goal is to navigate, not to learn to appreciate the visually pleasing graphic.

Visual to Spoken, Step by Step

Menus are lists. The first step in translating a graphic menu into a spoken menu is to determine how it is structured. Is it a linear list? Is it, in fact, a group of sub-lists? Or is it a grid, which is a group of lists that share certain attributes?

The second step is to decide whether or not any of the contextual visual information is absolutely necessary to communicate in order for the user to successfully navigate. Do the colors of the words really matter? Does the font size matter? Does the location on the screen matter? The answers to these questions may help determine how many different sub-lists are present on the same screen.

The third step is to create the spoken-menu structure based on the obvious or not-so-obvious list structure embedded in the graphic. For example, imagine a graphic screen with six menu items. What if three of the items are rendered in one color and relate to choice of programming, while the other three are in another color and relate to system preferences? The implication is that this list of six items is actually two lists of three items each. If the audio-navigation system simply allows the user to move from item to item, confusion may set in unless some additional audio cue lets the user know that the six items are actually two groups of three.
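
As a sketch of one possible audio cue for the six-item example, the screen can be modeled as named groups, with each group announced by name and size before its items are read. The group names, item names, and phrasing below are hypothetical; the source screen is described only as three programming choices and three system preferences.

```python
def spoken_script(groups):
    """Turn {group name: [items]} into an ordered list of spoken lines."""
    lines = []
    for name, items in groups.items():
        # Announce the group and its size so the listener hears the boundary.
        lines.append(f"{name}, {len(items)} items")
        for number, item in enumerate(items, start=1):
            lines.append(f"{number} of {len(items)}: {item}")
    return lines


# Hypothetical screen: two groups of three that look like one list of six.
SCREEN = {
    "Programming": ["Movies", "Sports", "News"],
    "System Preferences": ["Language", "Captions", "Audio"],
}
```

Calling `spoken_script(SCREEN)` yields eight lines: a heading for each group followed by its three numbered items, so the listener hears the boundary that color conveys on screen.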

The following are guidelines to help developers relay visual contextual information verbally:

- Consider numbering menu items and announcing at the top how many items are in the list. This strategy helps the user remain oriented.

- When changing from one sub-list to another while on the same screen, consider naming the lists, even if those names are not represented on the graphic version of the menu.

- If the graphic is a grid, allow the user to choose the axis of navigation. For example, if the grid is a program guide that presents time of day across the top and channel selections along the left, allow the user to choose to browse a list of programs on a given channel, sorted by time, or a list of programs at a given time, sorted by channel. In other words, help the user find answers to these two distinct questions: "What will be on PBS later tonight?" and "What is on TV right now?"

- Pay less attention to the look, and more attention to the feel of the graphic interface.

- Do not overburden the user with unnecessary information.
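
The grid guideline above can be illustrated by treating a program guide as a flat list of listings and letting the user pick which axis to browse. This is a minimal sketch with hypothetical sample data and function names; start times are zero-padded 24-hour strings so that plain string sorting puts them in time order.

```python
from collections import namedtuple

Listing = namedtuple("Listing", ["channel", "start", "title"])

# Hypothetical sample data, for illustration only.
GUIDE = [
    Listing("PBS", "20:00", "Nature Hour"),
    Listing("PBS", "21:00", "Science Tonight"),
    Listing("ABC", "20:00", "Evening News"),
    Listing("ABC", "21:00", "Movie of the Week"),
]


def browse_channel(listings, channel):
    """One row of the grid: programs on a channel, sorted by start time."""
    row = sorted((x for x in listings if x.channel == channel),
                 key=lambda x: x.start)
    return [f"{x.start}: {x.title}" for x in row]


def browse_time(listings, start):
    """One column of the grid: programs in a time slot, sorted by channel."""
    column = sorted((x for x in listings if x.start == start),
                    key=lambda x: x.channel)
    return [f"{x.channel}: {x.title}" for x in column]
```

Here `browse_channel(GUIDE, "PBS")` answers "What will be on PBS later tonight?" and `browse_time(GUIDE, "20:00")` answers "What is on TV right now?", each as a simple one-dimensional list suited to listening.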